Returning in 2022. Dates to be confirmed

Click here to Visit Autonomous Vehicle Test & Development 'Virtual' Live

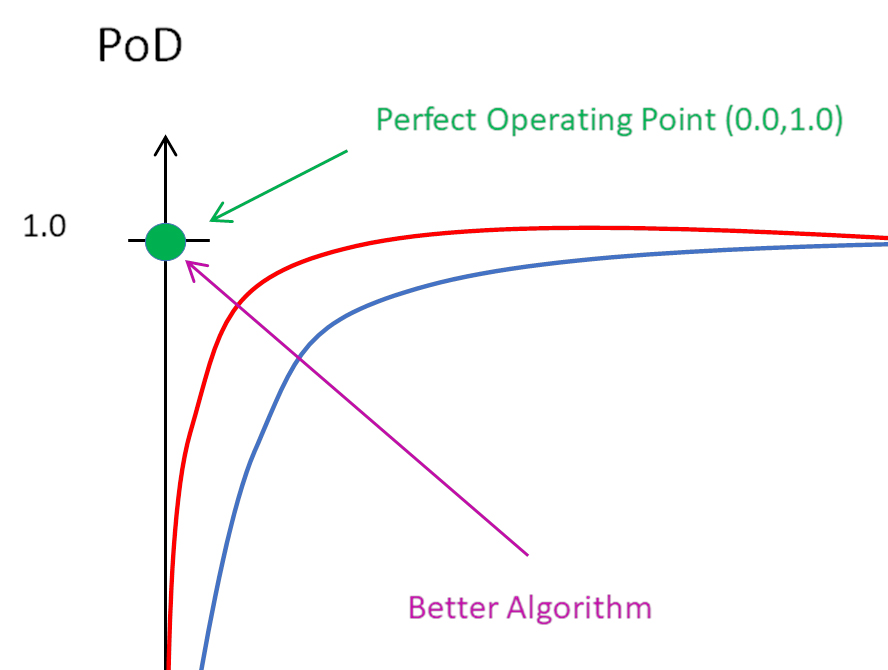

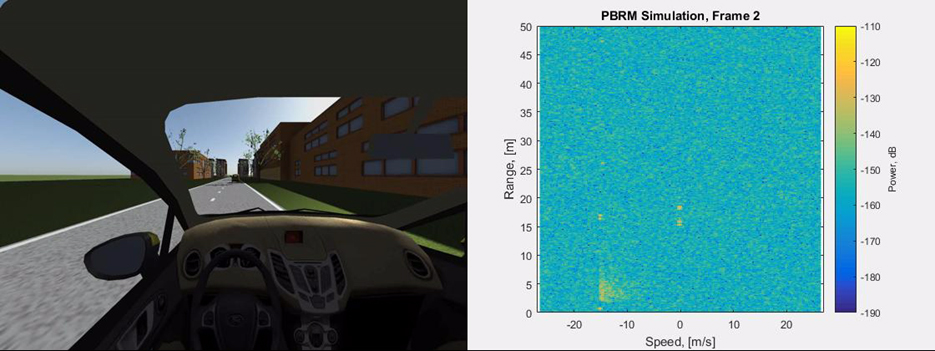

Tony Gioutsos, director of portfolio development for autonomous at Siemens, talks us through a simulation-based verification and validation procedure of AVs that’s truly robust. He will talk more about this in his presentation at the Autonomous Vehicle Test & Development Symposium. For more information, up-to-date programs and rates, click
